I. Introduction to Docker and Containerization.

Welcome to my blog on Docker architecture! Docker is a tool that lets developers package applications and their dependencies into lightweight, portable containers, which can then be deployed and run on any platform that supports Docker. In this blog, we will take a closer look at how Docker works and how it can streamline software development and deployment. We will also touch on the benefits of using Docker, including increased efficiency and consistency across environments. Whether you are new to Docker or just refreshing your understanding of its core concepts, I hope this blog gives you a solid foundation for further learning and exploration. So, let's get started!

II. Docker Architecture Overview.

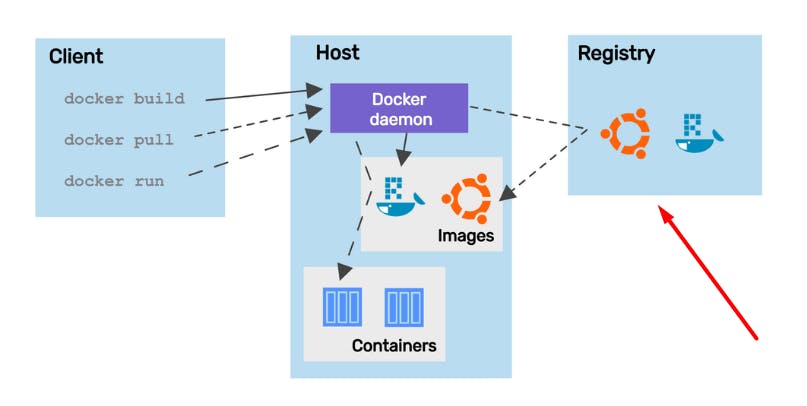

But what exactly is the Docker architecture, and how does it work? In this section, we will go over the key components that make it up.

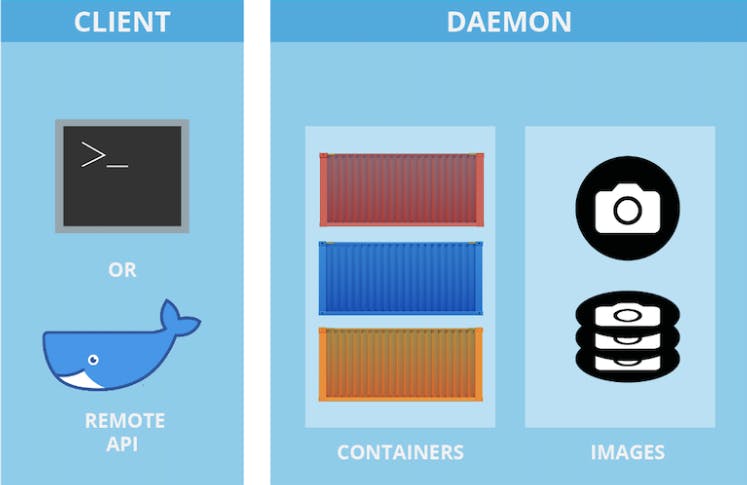

The Docker client (the docker command-line tool) is the interface that users interact with when working with Docker. It sends commands to the Docker daemon (dockerd) through the Docker Engine API, usually over a local Unix socket or a network connection. The daemon is the background process that actually carries out those commands. It manages images, containers, networks, and volumes, and it can communicate with other daemons to manage Docker services.
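To make the client and daemon split more concrete, here is a minimal sketch of the kind of request a client sends. It assumes the daemon listens on the default Linux socket path /var/run/docker.sock and uses the Engine API path /version; the helper names build_request and ask_daemon are made up for this example and are not part of Docker.

```python
import os
import socket
from typing import Optional

# Default daemon socket on Linux; an assumption, your setup may differ.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path: str) -> bytes:
    """Build a minimal HTTP/1.1 GET request for an Engine API path."""
    lines = [
        f"GET {path} HTTP/1.1",
        "Host: localhost",      # placeholder; the socket decides the destination
        "Connection: close",    # ask the daemon to close after one response
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def ask_daemon(path: str, socket_path: str = DOCKER_SOCKET) -> Optional[bytes]:
    """Send the request over the Unix socket and return the raw response.

    Returns None when no socket exists at socket_path (no daemon found).
    """
    if not os.path.exists(socket_path):
        return None
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request(path))
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks)


# Show the bytes a client would send; nothing is sent to the daemon here.
print(build_request("/version").decode("ascii"))
```

Calling ask_daemon("/version") on a machine with a running daemon should return the raw HTTP response, provided your user is allowed to access the socket. The docker CLI does the same job with far more care, so treat this only as an illustration of the request and response flow.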

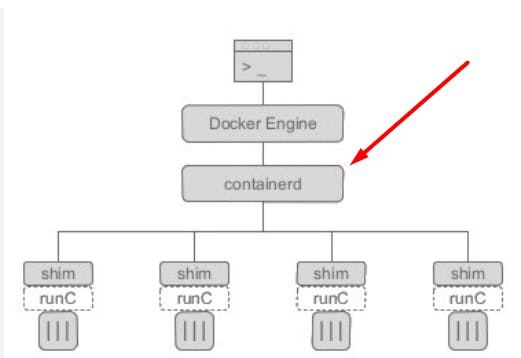

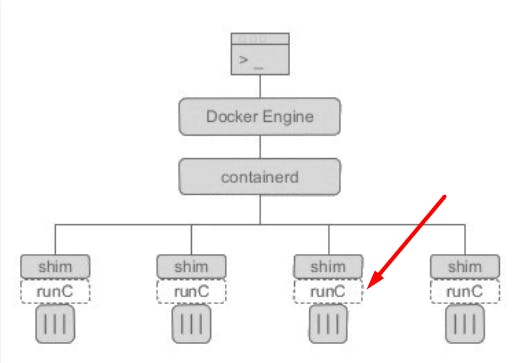

Under the hood, the Docker daemon delegates much of the work of managing containers to lower-level tools. One of these is containerd, a daemon that manages the container lifecycle on a host. containerd is responsible for tasks such as pulling and storing images, starting, stopping, and deleting containers, and supervising them while they run.

Another important component of the Docker architecture is runc, a command-line tool that creates and runs containers according to the Open Container Initiative (OCI) runtime specification. containerd calls runc to set up the container's isolated environment (Linux namespaces and cgroups) and start the container's process.

Finally, the Docker registry is a place where users can store and share Docker images. There are public registries like Docker Hub, as well as private registries that can be set up by organizations for internal use. The Docker daemon communicates with the registry to pull and push images as needed.

In summary, the Docker architecture consists of the Docker client, the Docker daemon, containerd, runc, and the Docker registry, all working together to enable the creation and management of Docker containers. In the next section, we will delve deeper into the concepts of Docker images and containers, and how they fit into this architecture.

III. Docker Images and Containers.

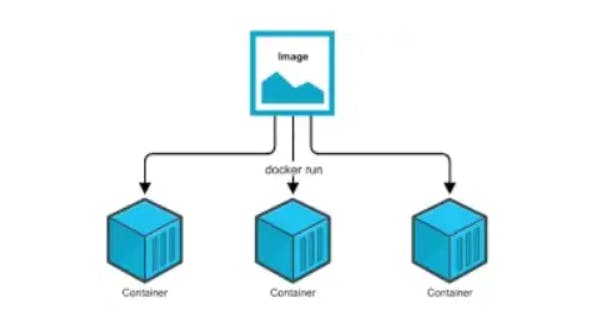

One of the key concepts in Docker is the distinction between images and containers. It's important to understand the differences between these two concepts, as they play different roles in the Docker ecosystem.

Docker images are templates that are used to create Docker containers. They contain all of the necessary code, libraries, and dependencies needed to run an application. Images are typically built using a series of instructions, known as a Dockerfile, which specify the steps needed to build the image.

Docker containers, on the other hand, are runnable instances of Docker images. Each container is created from an image, adds a thin writable layer on top of the image's read-only layers, and runs the application as an isolated process. Containers are lightweight and portable, making them easy to deploy and run on any platform that supports Docker.

To create a Docker container, you first need a Docker image, either pulled from a registry or built yourself. Building is done with the docker build command, which takes a Dockerfile and a build context as input and produces an image as output. Once you have an image, you can use the docker run command to create and start a new container based on that image.
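As a rough sketch of the build-then-run workflow, the snippet below assembles the two commands and prints them. The Dockerfile contents, the app.py file, the hello-app:latest tag, and the container name hello are all hypothetical; the script only executes the commands if you turn off dry_run and Docker is installed.

```python
import shutil
import subprocess
from typing import List

# Hypothetical Dockerfile for a one-file Python app (app.py is assumed to exist).
# Save this text as a file named "Dockerfile" next to app.py to use it.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def build_cmd(tag: str, context: str = ".") -> List[str]:
    """argv for building an image from the Dockerfile found in `context`."""
    return ["docker", "build", "-t", tag, context]


def run_cmd(tag: str, name: str) -> List[str]:
    """argv for creating and starting a detached container from an image."""
    return ["docker", "run", "-d", "--name", name, tag]


def execute(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command; run it only when dry_run is off and docker exists."""
    print(" ".join(cmd))
    if not dry_run and shutil.which("docker"):
        subprocess.run(cmd, check=True)


# Dry run: these two calls only print the commands.
execute(build_cmd("hello-app:latest"))
execute(run_cmd("hello-app:latest", "hello"))
```

Keeping the commands as argument lists avoids shell quoting problems if you later pass them to subprocess.run for real.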

Once a container is running, you can use various Docker commands to manage and manipulate it. For example, you can use the docker stop command to stop a running container and the docker rm command to delete a stopped one. By default, docker rm refuses to delete a container that is still running unless you add the -f flag.
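One way to picture these commands is as transitions in a small state machine. The following is a toy model written for this post, not how Docker is implemented: the Container class and the "removed" state are made up for illustration, and real containers have more states (paused, restarting, and so on).

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    EXITED = "exited"
    REMOVED = "removed"   # illustrative only; a removed container no longer exists


class Container:
    """Toy model of a container's lifecycle."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = State.CREATED            # as after `docker create`

    def _require_exists(self) -> None:
        if self.state is State.REMOVED:
            raise RuntimeError(f"no such container: {self.name}")

    def start(self) -> None:                  # like `docker start`
        self._require_exists()
        self.state = State.RUNNING

    def stop(self) -> None:                   # like `docker stop`
        self._require_exists()
        if self.state is State.RUNNING:
            self.state = State.EXITED

    def remove(self, force: bool = False) -> None:   # like `docker rm` / `docker rm -f`
        self._require_exists()
        if self.state is State.RUNNING and not force:
            raise RuntimeError("stop the container first, or remove with force")
        self.state = State.REMOVED


web = Container("web")
web.start()
try:
    web.remove()          # rejected: the container is still running
except RuntimeError as err:
    print(err)
web.stop()
web.remove()              # allowed once the container has exited
print(web.state.value)
```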

In summary, Docker images are used to create Docker containers, which are lightweight and portable instances of running applications. Understanding the differences between these two concepts is crucial for working with Docker effectively. In the next section, we will delve deeper into the topic of Docker networking and storage, and how these concepts relate to images and containers.

IV. Docker Networking and Storage.

Beyond packaging and running applications, Docker provides options for configuring how your containers connect to networks and where they store their data.

Networking options in Docker allow you to connect your containers to each other, to custom networks, or to the host network. This is useful if you want to reach services running in your containers from the host or from other hosts on the network, which typically also means publishing the container's ports with the -p flag. Docker provides several ways to connect your containers to networks, including the --network flag when starting a container and the docker network command for creating and managing custom networks.
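Here is a small sketch of how those pieces fit together: create a custom network, then start two containers on it. The network name app-net, the container names, and the images (redis:7 and nginx:1.27) are example choices, and the script only prints the commands rather than running them.

```python
from typing import List, Optional


def network_create_cmd(network: str) -> List[str]:
    """argv for creating a user-defined network."""
    return ["docker", "network", "create", network]


def run_on_network_cmd(
    image: str, name: str, network: str, publish: Optional[str] = None
) -> List[str]:
    """argv for starting a detached container attached to `network`.

    `publish` is an optional HOST_PORT:CONTAINER_PORT mapping for the -p flag.
    """
    cmd = ["docker", "run", "-d", "--name", name, "--network", network]
    if publish:
        cmd += ["-p", publish]
    cmd.append(image)
    return cmd


commands = [
    network_create_cmd("app-net"),
    run_on_network_cmd("redis:7", "cache", "app-net"),
    run_on_network_cmd("nginx:1.27", "web", "app-net", publish="8080:80"),
]
for cmd in commands:
    print(" ".join(cmd))
```

Containers attached to the same user-defined network can reach each other by container name, so in this setup web could talk to cache without any published port. Only web publishes a port to the host.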

Docker also provides options for managing data in your containers. One option is a volume: storage that Docker creates and manages itself, kept in a Docker-controlled area of the host filesystem and mounted into the container. Volumes are useful for preserving data across container restarts and removals, and for sharing data between containers. You can use the -v flag (or the more explicit --mount flag) when starting a container to specify the volume to mount.

Another option for managing data in Docker is a bind mount, which mounts a file or directory from the host system directly into the container. This is similar to a volume, but the data lives at a host path you choose rather than in Docker-managed storage, so both the container and processes on the host can read and change it.

Finally, an older pattern is the data volume container: a container created solely to hold volumes, which other containers then mount with the --volumes-from flag. The data lives in the volumes rather than in the container itself, so it persists even if the data volume container is deleted, as long as the volumes are not removed along with it. Named volumes have largely replaced this pattern.

In summary, Docker provides options for configuring the networking and storage of your containers. These options include custom networks for connectivity, and volumes, bind mounts, and data volume containers for data. Understanding and using these options effectively can help you connect your containers and manage their data.
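The difference between the two is easiest to see in the --mount flag, where the mount type is spelled out. The sketch below builds the flag value for each case; the helper name mount_flag, the volume name app-data, and the paths are invented for this example.

```python
def mount_flag(kind: str, source: str, target: str, read_only: bool = False) -> str:
    """Build the value for `docker run --mount ...`.

    kind   -- "volume" (Docker-managed storage) or "bind" (a host path)
    source -- volume name for "volume", host path for "bind"
    target -- path inside the container
    """
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type in this sketch: {kind}")
    parts = [f"type={kind}", f"source={source}", f"target={target}"]
    if read_only:
        parts.append("readonly")
    return ",".join(parts)


# A named volume: Docker decides where the data lives on the host.
print("--mount", mount_flag("volume", "app-data", "/var/lib/data"))

# A bind mount: the data lives at a host path we choose, mounted read-only here.
print("--mount", mount_flag("bind", "/srv/config", "/etc/app", read_only=True))
```

The shorter -v flag covers both cases too, but --mount makes it obvious at a glance which kind of mount you are asking for.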

V. Advanced Docker Features.

Docker is a powerful tool that provides a number of advanced features for managing and deploying multi-container applications. In this section, we will take a look at two of these advanced features: Docker Compose and Docker Swarm.

Docker Compose is a tool that allows you to define and run multi-container applications. It uses a configuration file, traditionally named docker-compose.yml (newer versions of Compose prefer compose.yaml), to specify the services that make up your application and how they should be configured and started. With Docker Compose, you can define and manage complex applications that are made up of multiple containers from a single file.
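To give a feel for what such a file contains, here is a small sketch of a two-service application. Compose files are normally written in YAML; since JSON is also valid YAML, this example builds the structure as a Python dictionary and prints it as JSON to stay within the standard library. The service names (web, cache) and images are example choices, not taken from any real project.

```python
import json

# Hypothetical two-service application: a web server that depends on a cache.
compose = {
    "services": {
        "web": {
            "image": "nginx:1.27",
            "ports": ["8080:80"],        # HOST_PORT:CONTAINER_PORT
            "depends_on": ["cache"],     # start cache before web
        },
        "cache": {
            "image": "redis:7",
        },
    }
}


def render_compose(model: dict) -> str:
    """Serialize the Compose model as indented JSON (which is valid YAML)."""
    return json.dumps(model, indent=2)


print(render_compose(compose))
```

If you save the output as compose.json, a command along the lines of docker compose -f compose.json up should start both services. In practice you would usually write the same structure directly in YAML.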

Docker Swarm (also called swarm mode) is a feature built into Docker Engine that allows you to create and manage a cluster of Docker nodes. A node is a machine that is running Docker and is part of the cluster. With Docker Swarm, you can deploy your applications as services and scale them across multiple nodes in the cluster. Docker Swarm also provides load balancing and reschedules containers when a node fails, which helps keep your applications available.
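A typical Swarm session comes down to a handful of commands: initialize the cluster, create a service with some number of replicas, and scale it later. The sketch below assembles those commands and prints them; the service name web, the image, and the replica counts are example values, and nothing is executed.

```python
from typing import List, Optional


def swarm_init_cmd() -> List[str]:
    """argv for turning the current Docker host into a swarm manager."""
    return ["docker", "swarm", "init"]


def service_create_cmd(
    name: str, image: str, replicas: int, publish: Optional[str] = None
) -> List[str]:
    """argv for creating a replicated service."""
    cmd = ["docker", "service", "create", "--name", name, "--replicas", str(replicas)]
    if publish:
        cmd += ["--publish", publish]
    cmd.append(image)
    return cmd


def service_scale_cmd(name: str, replicas: int) -> List[str]:
    """argv for changing the number of replicas of an existing service."""
    return ["docker", "service", "scale", f"{name}={replicas}"]


for cmd in (
    swarm_init_cmd(),
    service_create_cmd("web", "nginx:1.27", replicas=3, publish="8080:80"),
    service_scale_cmd("web", 5),
):
    print(" ".join(cmd))
```

On a multi-node cluster, the other machines would first join with the docker swarm join command that docker swarm init prints, and Swarm would then place the replicas across the available nodes.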

In summary, Docker Compose and Docker Swarm are advanced features that allow you to manage and deploy multi-container applications. Docker Compose makes it easy to define and run complex applications, while Docker Swarm allows you to create and manage clusters of Docker nodes and deploy applications across them. Understanding and using these features can help you take your Docker skills to the next level.

VI. Conclusion.

In this blog post, we covered the components of Docker's architecture and how they work together to enable containerization. We also looked at images and containers, networking and storage, and advanced features for multi-container applications. To summarize, Docker is a powerful tool that allows developers to package and deploy their applications in lightweight and portable containers. The Docker architecture consists of the Docker client, daemon, containerd, runc, and registry, all working together to manage Docker containers. Additionally, Docker offers advanced features like Docker Compose and Docker Swarm to manage and deploy multi-container applications.

The future of software development and deployment looks bright for Docker and containerization. As more companies adopt containerization to streamline their processes, the demand for Docker expertise will likely increase. I hope this blog has been a helpful introduction to Docker architecture and its components, whether you are a beginner or an experienced user.

VII. Additional Resources.

If you're interested in learning more about Docker architecture and its various components, there are several resources available online that can provide additional information and guidance. Here are a few links to check out:

The video titled "Docker for Beginners" on the TechWorld with Nana YouTube channel is a great resource for those who are new to Docker and want to learn more about how it works. In this video, the presenter provides a detailed introduction to Docker and its various components, including the Docker client, the Docker daemon, and the Docker registry. The video also covers key concepts like Docker images and containers, as well as advanced features like networking and storage.

https://www.youtube.com/watch?v=3c-iBn73dDE&t=1s&ab_channel=TechWorldwithNana

Kunal Kushwaha's "Docker Tutorial for beginners" video: https://www.youtube.com/watch?v=17Bl31rlnRM&t=4566s&ab_channel=KunalKushwaha

The Docker documentation (docs.docker.com/get-started) is a comprehensive resource for learning about Docker and containerization.